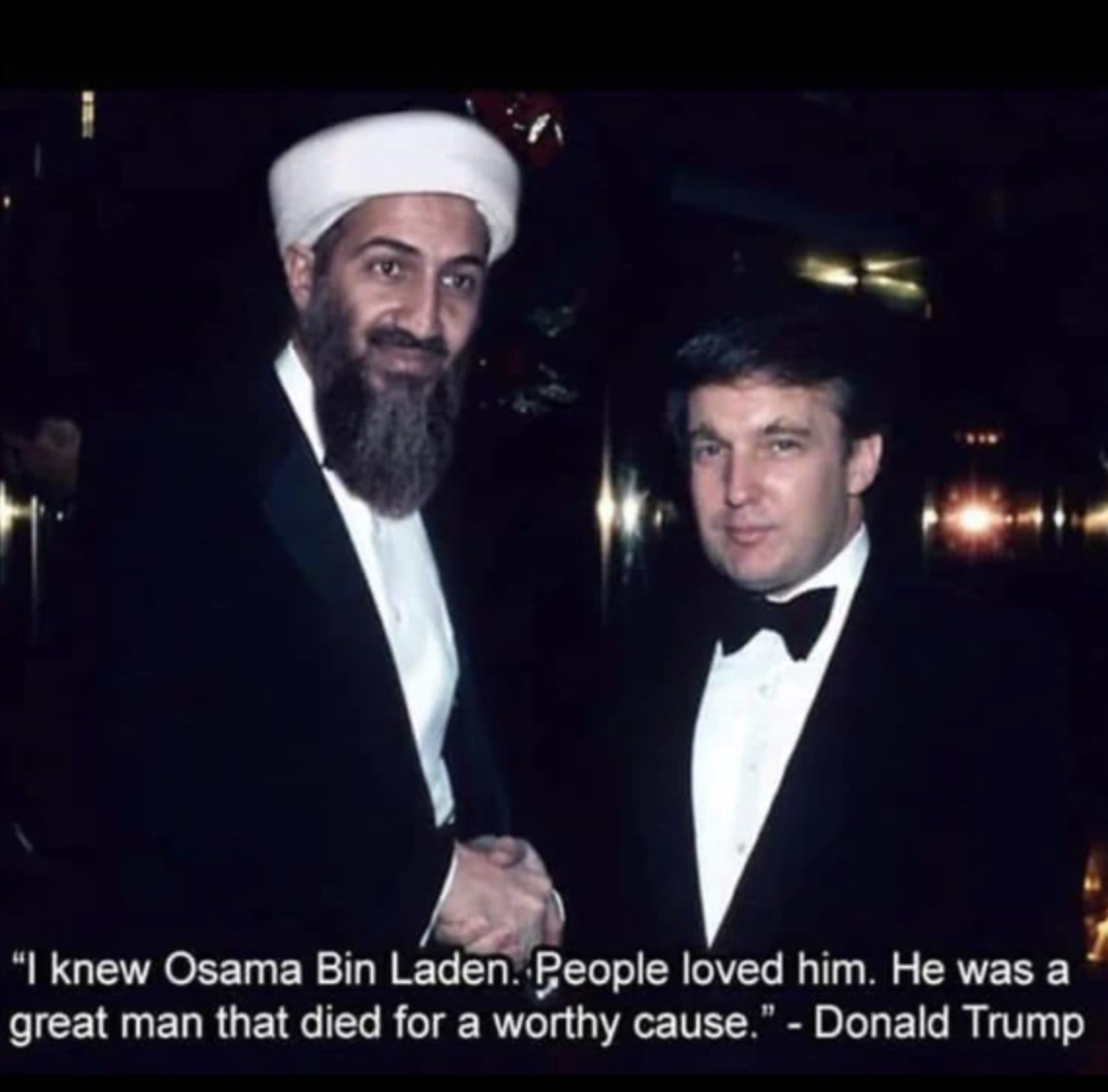

The image purportedly shows US President Donald Trump in his younger days, shaking hands with global terrorist Osama bin Laden. It went viral during the 2020 US presidential election. The picture also has a quote superimposed on it, praising bin Laden, which is attributed to Trump.

Factify5WQA - Please visit this link for details.